Original post on Reddit

Hardware

Previously I posted about the used 60-bay DAS units I recently acquired and racked. Since then I've figured out the basics of using them and have them up and working.

Ceph

The current setup has one unit with 60 2TB-3TB drives attached to a single R710. The other unit has 22 2TB, 3TB, and 8TB disks. It's attached to one active R710. Although as the number of 8TB disks grows I'll be forced to attached the other two R710s to it. The reason for this is [Ceph](https://ceph.io/) OSDs need 1GB of RAM per OSD plus 1GB of RAM per TB of disk. The R710s each have 192GB of RAM in them.

Each of the OSDs is set to use one of the hard drives from the 60-bay DAS units. They also share a 70GiB hardware RAID1 array of SSDs that's used for the Bluestore Journal. This only gives each of them a few GiB of space each on it. However testing with and without this configured made a HUGE difference in performance - 15MB/s-30MB/s vs 50MB/s-300MB/s.

There is also a hardware RAID1 of SSDs that's 32GB in size. They are used for OSDs too. Those OSDs are set as SSD in CRUSH and the CephFS Metadata pool uses that space rather than HDD tagged space. This helps with metadata performance quite a bit. The only downside is that with only two nodes (for now) I can't set the replication factor above replicated,size=2,min_size=2. Once there is a third node it'll be replicated,size=3,min_size=2. This configuration will allow all but one host to fail without data loss on CephFS's metadata pool.

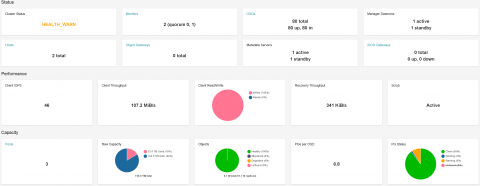

The current setup gives just over 200TiB of raw [Ceph](https://ceph.io/) capacity. Since I'm using an erasure coded pool for the main data storage (Jerasure, k=12,m=3) that should be around 150TiB of usable space. Minus some for the CephFS metadata pool, overhead, and other such things.

Real World Performance

When loading data the performance is currently limited by the 8TB drives. Data is usually distributed between OSDs proportional to free capacity on the OSD. This results in the 8TB getting the majority of the data and thus getting hit the hardest. Once 8TB disks make up the majority of the capacity it will be less of an issue. Although it's possible to change the weight of the OSDs to direct more load to the smaller ones.

The long term plan is to move 8TB drives from the SAN and DAS off of the server that's making use of this capacity as they're freed up by moving data from them to CephFS.

When the pool has been idle for a bit the performance is usually 150MB/s to 300MB/s sustained writes using two rsync processes with large, multi-GB files. Once it's been saturated for a few hours the performance has dropped down to 50MB/s to 150MB/s.

The performance with mixed read/write, small files write, small file read, and pretty much anything but big writes hasn't been tested yet. Doing that now while it's just two nodes and 80 OSDs isn't the best time. Once all the storage is migrated over - around 250TiB additional disk - it should be reasonable to produce a good baseline performance test. I'll post about that then.

Stability

I've used Ceph in the past. It was a constant problem in terms of availability and crazy bad performance. Plus it was a major pain to setup. A LOT has changed in the few years since I last used it.

This cluster has had ZERO issues since it was setup with SELinux off and `fs.aio-max-nr=1000000`. Before `fs.aio-max-nr=1000000` was changed from it's default of 500,000 there were major issues deploying all 60 OSDs. SELinux was also making things hard for the deploy process.

Deployment

Thanks to CephAdm and Docker it's super easy to get started. You basically just copy the static cephadm binary to the CentOS 7 system, have it add repos and install an RPM copy of cephadm, then setup the required docker containers to get things rolling.

Once that's done it's just a few `ceph orch` commands to get new hosts added and deploy OSDs.

Two critical gotchas I found were:

- SELinux needs to be off (maybe)

- fs.aio-max-nr needs to be greatly increased

If you don't do the `fs.aio-max-nr` part and maybe the SELinux part then you may run into issues if you have a large number of OSDs per host system. Turns out that 60-disks per host system is a lot ;)

$ cat /etc/sysctl.d/99-osd.conf

# For OSDs

fs.aio-max-nr=1000000

I chose to do my setup from the CLI. Although the web GUI works pretty well too. The file `do.yaml` below defines how to create OSDs on the server `stor60`. The tl;dr is that it uses all drives with a size at least 1TB for bluestore backed OSDs. It also uses any drives between 50GB and 100GB for the bluestore journal for those devices. The 'wal_slots' value is so that each logical volume only gets at most space for 60 of those on the WAL device.

do.yaml

service_type: osd

service_id: osd_spec_default_stor60

placement:

host_pattern: 'stor60'

data_devices:

size: '1T:'

wal_devices:

size: "50G:100G"

wal_slots: 60

Followed by

ceph orch apply osd -i do.yaml